A Brief History of Modern AI

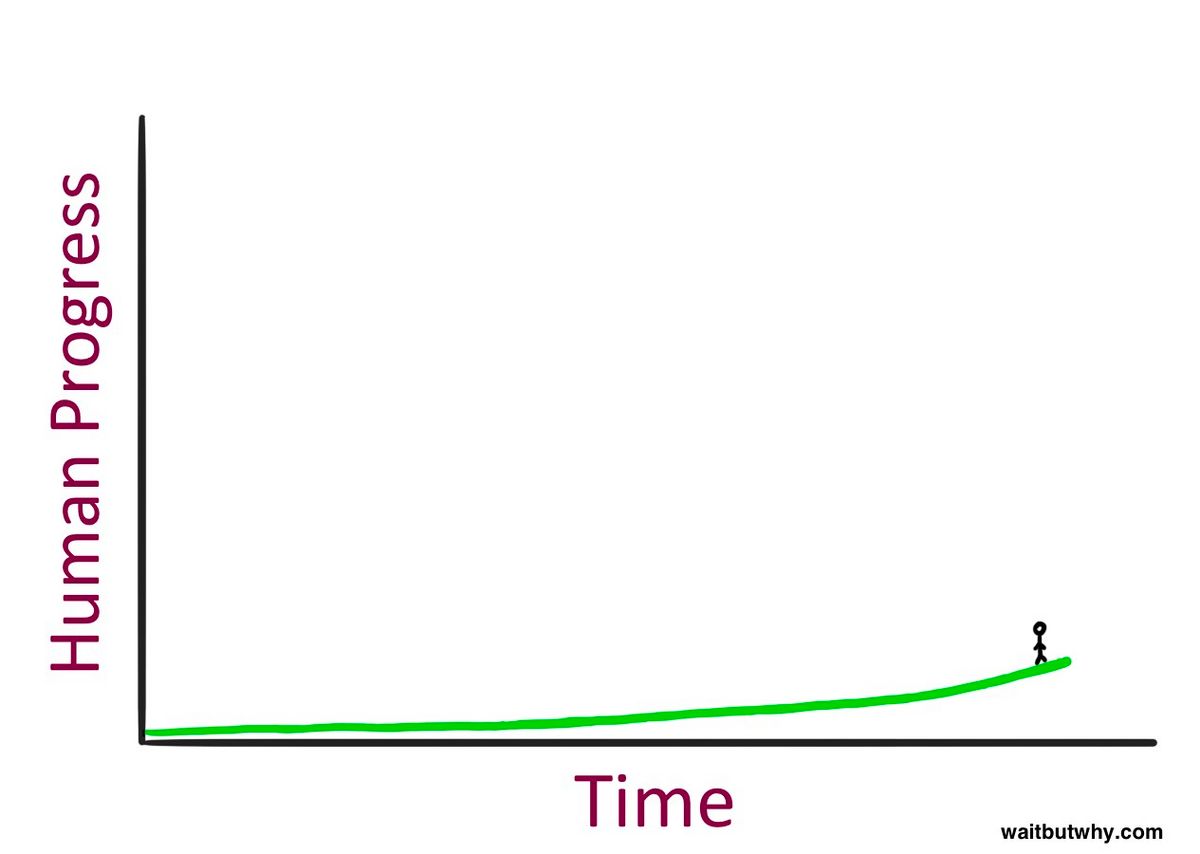

Gradually, then suddenly.

Here's your daily briefing:

These AI generated Nike outfits are taking Twitter by storm. When Ye x DALL-E 2 collab?

AI Generated Nike Outfits (2022)

— Outlander Magazine (@StreetFashion01)

10:44 AM • Oct 23, 2022

This walkthrough of a workflow using Runway ML for generative image creation is pretty cool. Is it wild that we're already getting used to things like this?

Using @runwayml for generative image creation is a game changer. Why? Because you can control every aspect of it from generation, to replacement, and style implementation. Curious? Here's my workflow:

— Always Editing (@notiansans)

6:41 PM • Oct 21, 2022

A recent paper from the giga-brains at Los Alamos National Laboratory describes a novel method for comparing neural networks, one that lets researchers peer into the "black box" and gain insight into the mathematics of how neural networks do what they do.

“The artificial intelligence research community doesn’t necessarily have a complete understanding of what neural networks are doing; they give us good results, but we don’t know how or why.”

A crucial part of being prepared for where one is going is having an understanding of where one has been (or so we've heard).

It's in this spirit that we thought we'd take a little Sunday stroll through the (relatively recent) history of artificial intelligence.

While the intellectual roots of AI arguably stretch all the way back to ancient history and the Greeks, we thought we'd save the real archaeology for another day and instead focus on the last 75 or so years.

Science fiction began to introduce the idea of artificially intelligent beings to the general public in the early 20th century. First in novels and then in films (with the "heartless" Tin Man from The Wizard of Oz and the humanoid robot from Metropolis), the idea of "thinking machines" was planted in the cultural consciousness.

An entire generation of scientists, mathematicians, and philosophers had internalized the idea of artificial intelligence by the 1950s.

One of these individuals was a young British polymath named Alan Turing, who used his intellectual gifts to investigate the mathematical potential of artificial intelligence.

Turing argued that since we humans use available information along with reason to make choices and solve problems, machines should be able to do the same. This premise was the logical foundation of his 1950 paper, Computing Machinery and Intelligence, which examined how to create intelligent machines.

Turing believed that if a machine could carry on a conversation by way of a teleprinter, imitating a human with no noticeable differences, the machine could be described as "thinking." This criterion is commonly known these days as "the Turing test."

Five years later, Turing's ideas were explored in a "proof of concept" by Allen Newell, Herbert Simon, and Cliff Shaw called "Logic Theorist."

Logic Theorist was a program designed to mimic the logic and reasoning capabilities of the human mind. Funded by the RAND Corporation, Logic Theorist was presented at the historic Dartmouth Summer Research Project on Artificial Intelligence conference, hosted by John McCarthy and Marvin Minsky, and is considered to be the first demonstration of a running AI program.

While the Dartmouth conference fell short of McCarthy's expectations due to a general incoherence and failure to agree on standard methods for the field, the overall sentiment was positive: this AI thing was achievable.

The significance of that meeting of the minds can't be overstated, as it was a catalyst for the next several decades of research. From then until around 1974, the field of artificial intelligence flourished.

Computers were improving, able to store more information and getting faster and cheaper and more accessible as time went on. Algorithms were also improving along with researchers' knowledge of which algorithm was best for which problem.

This intellectual and technical growth led to better demonstrations, such as Newell and Simon's General Problem Solver and Joseph Weizenbaum's ELIZA, an early chatbot.

These demos convinced government agencies such as DARPA that there was a "there" there and led them to begin funding AI research. General enthusiasm was high, culminating in Marvin Minsky's 1970 proclamation to Life Magazine:

“In from three to eight years we will have a machine with the general intelligence of an average human being.”

But, as is now more commonly understood to be the norm in innovative fields, initial excitement had obscured serious obstacles.

The biggest of these obstacles was a sheer lack of computational power. Computers simply couldn't store enough information or process it fast enough. Hans Moravec, a doctoral student of McCarthy at the time, stated that "computers were still millions of times too weak to exhibit intelligence."

As patience began to dwindle, so did the funding, and research slowed to a trickle for years, a period known today as "the AI winter."

However, the "winter" didn't fully extinguish the flame of AI.

In the 1980s, the field was slowly reignited by two drivers: expanding algorithmic proficiency and increased funding.

On the latter point, the Japanese government invested heavily in "expert systems" and other AI-related endeavors. Between 1982 and 1990, it invested 400 million dollars in AI research with the goal of revolutionizing computer processing and programming as well as improving artificial intelligence.

Unfortunately, many of the more ambitious goals were not met, leading to another slow bleed of funding. Nevertheless, the indirect effects of Japan's Fifth Generation Computer Project (FGCP) inspired a talented young generation of engineers, mathematicians, and scientists.

And the tinkerers, as the tinkerers do, kept tinkering. Thus, even in the relative absence of funding and hype, AI thrived during the 1990s and 2000s.

And then, in 1997, reigning world chess champion Garry Kasparov was defeated by IBM's Deep Blue.

This was the first time a reigning world chess champion had lost a match to a computer, and it was a pivotal step forward for AI decision-making programs. As time marched forward and Moore's Law had a chance to catch up with what we learned about machine learning, the field of artificial intelligence began to explode into what it is today.

Less than 20 years after Deep Blue beat Kasparov, AlphaGo, built by Google's DeepMind, defeated world champion Go player Lee Sedol.

To compare the relative computational ability needed to "think about" chess versus Go, consider that after each player has made one move in a chess match, there are 400 possible board positions, whereas in Go there are nearly 130,000 🤯.
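For the curious, the arithmetic behind that comparison can be sketched in a few lines of Python. This is a rough count, assuming a standard 19x19 Go board and ignoring board symmetry; the variable names are ours, purely for illustration.

```python
# Opening arithmetic behind the chess-versus-Go comparison.

# Chess: each side can open with 16 pawn moves (8 pawns, one or two squares)
# plus 4 knight moves (2 knights, two target squares each).
chess_first_moves = 8 * 2 + 2 * 2
chess_after_two_moves = chess_first_moves * chess_first_moves

# Go: a 19x19 board has 361 points. The first stone can go on any of them,
# and the reply on any of the remaining 360 (symmetry is ignored here).
go_points = 19 * 19
go_after_two_moves = go_points * (go_points - 1)

print(chess_after_two_moves)  # 400
print(go_after_two_moves)     # 129960
```

The gap only widens from there, since every later Go move still has hundreds of open points to choose from.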

In many ways, Moore's Law offers us a key insight into the roller coaster, peaks-and-troughs history of AI: we stretch AI to the limits of our current computational power, which leads to an inevitable "disappointment" (since expectations can always exceed reality), and then we wait for Moore's Law to catch up to our expectations again, and so on and so on.

And now here we are, in the world of "big data," where our capacity to collect vast amounts of information has gone truly skyward 🚀.

Big data has shown us that even if algorithms were to stagnate, the sheer amount of learning made possible by the increase in data is a huge driver of AI capability. Large language models such as GPT-3 and text-to-image generators like DALL-E 2 and Stable Diffusion show us the incredible power of training an algorithm on an enormous data set.

So what does the future hold for AI? 🤔

That's a big question, with lots of different answers. We thought it might be better to wade into that one in, well, the future.

For now, we leave you with:

"the future world of AI, watercolor"