Prompt Hunt Newsletter

The New Trolley Problem

Will new tech force us to answer the oldest questions?

Here's your daily briefing:

We played around with Looka, which looks (ha!) like a pretty great resource for AI generation of logos and branding ideas. What are your thoughts on these? We like the bottom left:

2. Looka

Why spend hours designing your own logo and branding when Looka can handle all that for you?

Its powerful AI takes into account your industry and design-style to put together a logo that suits exactly what you need.

👉 looka.com

— Nikki Siapno (@NikkiSiapno)

9:28 AM • Oct 24, 2022

You know things are getting exciting in some area of tech when the New York Times writes about it in a not-completely-dismissive way. This article about Stability AI's recent "coming out" party is a good read that fairly (if shallowly) lays out the different perspectives on the benefits and risks of open-source AI. It was worth reading for this gem from Stability founder Emad Mostaque alone:

“So much of the world is creatively constipated, and we’re going to make it so that they can poop rainbows.”

With so much happening so fast in the generative AI space, it can be difficult to keep track of who's doing what in which niche. This thread by Sequoia partner Sonya Huang is a great map of this "emerging frontier":

Introducing the @sequoia Gen AI Market Map!🌎 We’ve decided to map out this emerging frontier, thanks to all the contributions and feedback we’ve received.

This space is moving quickly – this map is a living document, so keep the suggestions coming! Who else should we include?

— Sonya Huang 🐥 (@sonyatweetybird)

4:05 PM • Oct 17, 2022

The world of artificial intelligence is exciting because of its implications for our future (duh) and all of the new questions it forces us to ask.

But what's less widely appreciated is how AI forces us to ask very old questions in new ways and with new urgency.

Questions like:

What does it mean to be human?

What is consciousness?

What is intelligence?

What is creativity and how does it work?

How do we make decisions?

🤔

Before AI, many of these questions could be relegated to the smoke-filled dorm rooms of philosophy students or the papers of cognitive scientists, neuroscientists, and psychologists. It's not that they weren't important before, it's just that they weren't necessarily urgent.

But as AI continues to proliferate into every facet of our lives, doing more and more of our "thinking" for us, we're re-confronted with these profound questions in newly practical and urgent ways.

In the most general sense, think about it this way: to program an AI (or eventual AGI) to give us what we want, we have to know what we want.

Turns out that knowing what we want, i.e. defining the problem and solution, is half the battle in designing AI tools and products.

It's one thing to jawbone about what users of a social media platform "want" or how to design the ideal solution to the "problem" of getting people to buy more things they don't need.

But what about when the questions AI forces us to ask become much more consequential?

What happens when the question becomes: Who dies?

In case it hasn't occurred to you yet: this age-old philosophical problem has now become a practical and technical one.

Why?

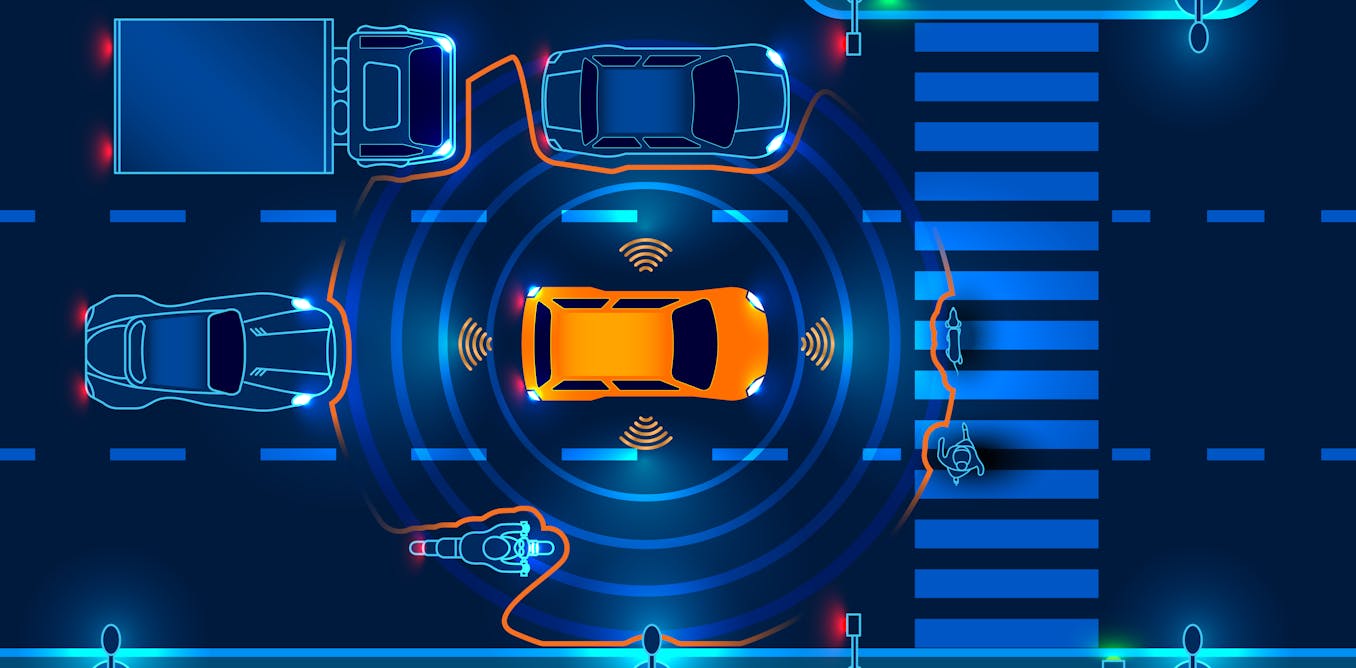

Hmm, maybe you've heard of these crazy things called autonomous vehicles?

Of course you have.

With the continued success and proliferation of Tesla and other electric vehicles, the discussion around self-driving cars has become more and more mainstream. And while Elon Musk may be criticized for the wobbliness of his timelines for when we'll have Level 4 or Level 5 autonomous vehicles, we all know that their eventual arrival is inevitable.

Which means that one of the most famous philosophical thought experiments of all time has now become one of the most famous practical problems of our time.

Enter: the trolley problem.

Stated simply, the trolley problem is as follows:

There is a runaway trolley barreling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two (and only two) options:

1. Do nothing, in which case the trolley will kill the five people on the main track.

2. Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the more ethical option? Or, more simply: What is the right thing to do?

Most people acknowledge that the trolley problem is an interesting thought experiment about the role of choice and responsibility and the "value" of human life.

But how many of us realize how often life itself presents us with trolley-problem-like situations?

The situation from the article above happens all the time on our roadways.

Whether it's a pickup truck that slammed on its brakes or, god forbid, a child who has run after a ball into the middle of the road, human drivers are confronted every day with situations where a choice must be made in a split second about which of several undesirable alternatives is best.

Do you hit the truck? Do you swerve to the left and hit a tree? Or do you swerve to the right and hit a pedestrian?

In situations like this, we humans make a decision, but often we don't know why we chose what we did. Because these life-or-death decisions are often made so quickly that our conscious minds don't have a chance to weigh in, it's our unconscious that's doing the deciding.

"Until you make the unconscious conscious, it will direct your life and you will call it fate." (commonly attributed to Carl Jung)

But now we have to make all of these unconscious calculations conscious and explicit and code them into algorithms for autonomous vehicles to use.

This obviously raises a lot of questions about human value, bias, and morality, as well as meta-questions like who we should even allow to answer these questions.

Should Tesla be able to decide how much moral weight or value to assign to different people?

Should the government? Should the driver?

Or should the AI itself decide?

These are big questions and this is a big discussion. For some more interesting and in-depth perspective, check out these pieces:

For now, we leave you with this:

"an AI powered robot thinking while holding the lever from the trolley problem":